MAGIC: Generating Self-Correction Guideline for In-Context Text-to-SQL

Observations in the scientific community are typically carried out in well-controlled settings to reduce the influence of factors such as the researchers' own assumptions and the randomness of their surroundings. Providing open access to study procedures and results, and encouraging critical analysis of findings, are necessary conditions for the scientific community (Beyerstein, 1995).

Partial queries, like "4 pe", can match "four petals" because most keyword search engines support prefix search. This enables the search-as-you-type feature, where a user sees results or query suggestions as they type. Similarly, a "blue" query can return "azure" flowers, but only if you explicitly tell the engine that "blue" and "azure" are synonyms.

It's still something that is quite achievable with machine learning, at least in our experience, particularly when we have a lot of labeled data to train on. We then adjust our thresholds afterward to reflect any differences in performance that we see.
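The prefix and synonym behavior described above can be sketched with a toy inverted index. This is a minimal illustration, not how any particular search engine implements it: the `SYNONYMS` table, the helper names, and the sample documents are hypothetical, and mapping "4" to "four" is an assumption added so that the "4 pe" example resolves (prefix matching alone does not turn "4" into "four").

```python
from collections import defaultdict

# Hypothetical synonym table. A keyword engine only knows these equivalences
# because someone configured them explicitly.
SYNONYMS = {
    "blue": {"azure"},
    "4": {"four"},  # assumption: digit-to-word normalization via a synonym rule
}


def tokenize(text):
    """Lowercase and split on whitespace (deliberately simplistic)."""
    return text.lower().split()


def build_index(docs):
    """Build an inverted index: token -> set of document ids containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index


def expand(term):
    """Return the term together with any explicitly configured synonyms."""
    return {term} | SYNONYMS.get(term, set())


def search(index, query):
    """AND together all query terms; the last term is matched by prefix
    so that results can update while the user is still typing."""
    terms = tokenize(query)
    result = None
    for position, term in enumerate(terms):
        is_last = position == len(terms) - 1
        matches = set()
        for variant in expand(term):
            if is_last:
                # Prefix match: "pe" matches "petals", "pear", ...
                for token, doc_ids in index.items():
                    if token.startswith(variant):
                        matches |= doc_ids
            else:
                # Earlier terms must match a whole token exactly.
                matches |= index.get(variant, set())
        result = matches if result is None else result & matches
    return result if result is not None else set()


DOCS = {1: "four petals", 2: "azure flowers", 3: "blue sky"}
INDEX = build_index(DOCS)

print(search(INDEX, "4 pe"))  # {1}
print(search(INDEX, "blue"))  # {2, 3}
```

Because only the final term is prefix-matched, "4 pe" behaves like a partially typed query. Without the `SYNONYMS` entry, "blue" would never return the "azure" document, which is the point the text makes about explicit synonym configuration.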

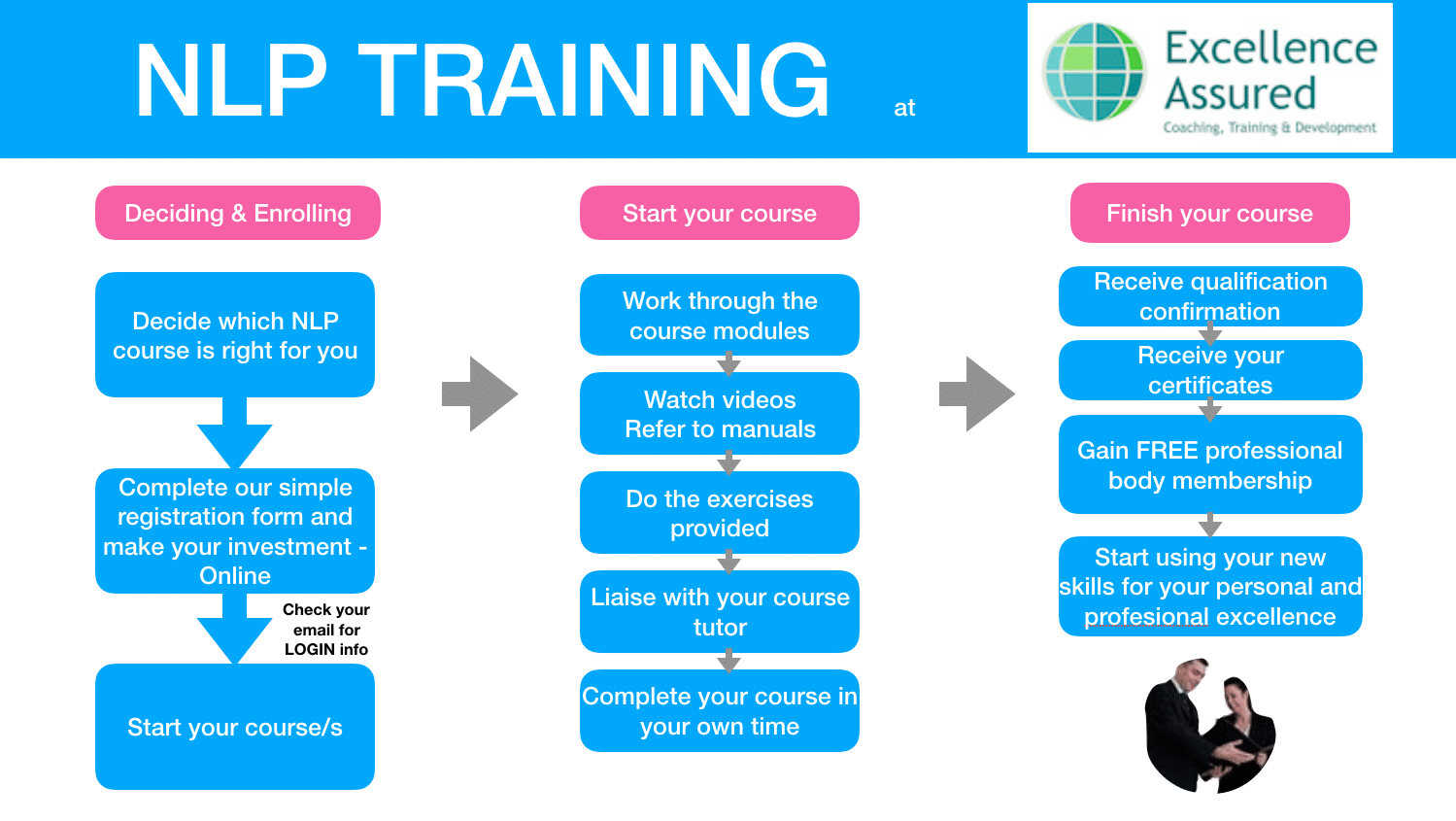

- Section 3 unpacks Meta Programs, from the basic Meta Program filters to the most complex ones, and shows how profound change can be made by changing Meta Programs, and just how easy that can be.

That would mean abstracting around 125,000 individual charts to find 50 of these patients, which is essentially prohibitively expensive, and we would probably never do that. Once performance is good, we release the model, and the last step is to monitor its performance over time. Yet that assumes teachers will be as aware of current NLP research as a developmental psychologist would be of their own field. Or, more fundamentally, how to even tell what a block is based on the patterns of light hitting my eye. However, it is actually very easy to collect countless images in which people have already identified who is in them.
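To make the cost argument concrete, the figures quoted above (about 125,000 charts abstracted to find 50 patients) imply the following yield. Only the two input numbers come from the text; the variable names are illustrative.

```python
# Input figures quoted in the text.
charts_abstracted = 125_000
patients_found = 50

# How many charts an abstractor would read per eligible patient found.
charts_per_patient = charts_abstracted / patients_found

# Fraction of abstracted charts that actually contain an eligible patient.
yield_rate = patients_found / charts_abstracted

print(f"{charts_per_patient:,.0f} charts per eligible patient")  # 2,500 charts per eligible patient
print(f"{yield_rate:.2%} of charts contain an eligible patient")  # 0.04% of charts contain an eligible patient
```

In other words, roughly 2,499 of every 2,500 charts read would be spent ruling patients out, which is why manual abstraction at this scale is described as prohibitively expensive.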

The Keyword Search Algorithm

So I want to spend a bit of time on a few of the principles that guide all of the work we do in this space, and then we'll jump into concrete examples illustrating these principles. The first is that machine learning will empower humans, not replace them. I'm going to dive into each of these in a little more depth.
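One common way to put "machine learning will empower humans, not replace them" into practice is confidence-based routing: the model handles the cases it is confident about and sends the rest to a human expert. The sketch below is an illustrative assumption, not a description of Flatiron's actual system; the `Prediction` type, the `route` function, and the 0.9 threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    chart_id: str
    label: str
    confidence: float  # model probability for `label`, in [0, 1]


def route(predictions, threshold=0.9):
    """Split predictions into an auto-accepted list and a human-review queue.

    `threshold` is a hypothetical tuning knob: raising it sends more cases
    to human abstractors; lowering it lets the model decide more on its own.
    """
    accepted, needs_review = [], []
    for prediction in predictions:
        if prediction.confidence >= threshold:
            accepted.append(prediction)
        else:
            # Low confidence: the model "raises its hand" and defers to a human.
            needs_review.append(prediction)
    return accepted, needs_review


preds = [
    Prediction("chart-1", "positive", 0.97),
    Prediction("chart-2", "negative", 0.55),
    Prediction("chart-3", "positive", 0.91),
]
accepted, needs_review = route(preds)
print([p.chart_id for p in accepted])      # ['chart-1', 'chart-3']
print([p.chart_id for p in needs_review])  # ['chart-2']
```

The threshold is the kind of setting that would typically be chosen on validation data and revisited as model performance is monitored over time.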

So that's why at Flatiron we take the approach of technology-enabled abstraction. We use technology to do the things that technology is good at, but we pair it with expert clinical abstractors, who can apply their unique human expertise to the abstraction itself. One piece of this technology supports abstraction, like the abstraction lab that you can see and explore outside. Another piece of that technology is machine learning, which we'll discuss. As I mentioned, one of the most common tools in this space is machine learning: it learns how to behave from many examples, but when it encounters a new kind of example, many machine learning models don't really know what to do with it, because they have no experience with it. Humans, on the other hand, can use common-sense reasoning to figure out how to solve that challenge, or they can simply raise their hand and say, "I don't know what this is. Let me go talk to someone who does."

Natural Language Processing

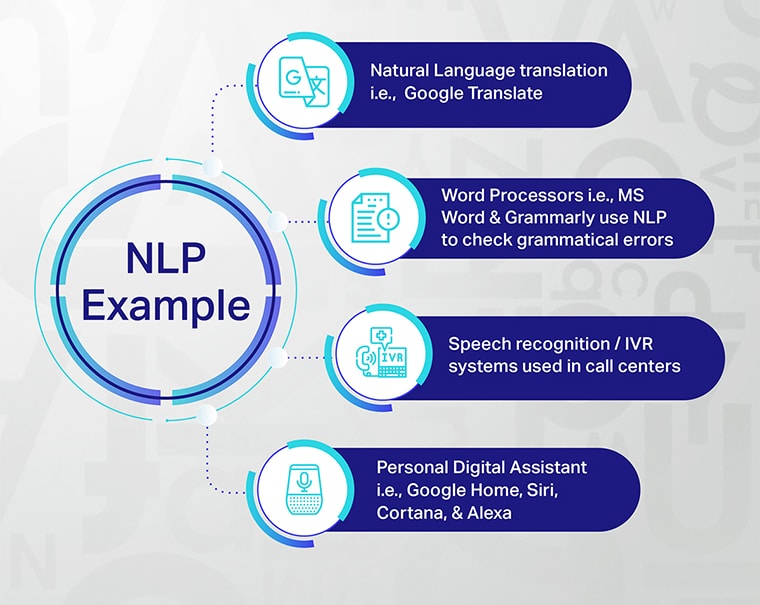

We found that while self-correction improves performance across all databases, some databases benefit much more from MAGIC's guidelines. This variability may be due to the capability of the LLM, the varying degrees of challenging details in the databases, or other factors. This observation could motivate the development of guidelines adapted to database difficulty in the future.

Human language is full of ambiguities that make it difficult to write software that accurately determines the intended meaning of text or voice data. Keyword search technology, combined with more AI-driven technologies such as NLU (natural language understanding) and vector-based semantic search, can take search to a new level.

The book's content will not only move the beginner to new heights of professional standards, it will also add to the continuing education of experienced hypnosis professionals seeking further development and growth. A world-renowned Master Trainer of Neuro-Linguistic Programming (NLP) and professional leader in the fields of NLP, Hypnotherapy, NLP Training, and Time Line Therapy® presents a flawless account of Time Line Therapy® Techniques at an introductory level surpassed by none. Adriana has created a starting point for an uplifting journey with solid foundations for growth and development, one that clarifies and establishes a continuing fountain of quality, springing forth with a flow of effortless understanding for the reader.

Programming refers to the way our experiences and learning are encoded into our lives, i.e. as habitual patterns, similar to how software runs a computer. The idea that a person's PRS (preferred representational system) can be identified by closely observing their eye movements was also disproved. Similarly, Dorn et al. (1983) concluded from their review that no demonstrably reliable method existed for assessing the proposed PRS. After examining the potential for NLP use by the US Army, the Army Research Institute (ARI) reached the same conclusion: there is insufficient evidence to support NLP's effectiveness (Swets & Bjork, 1990). In addition, the broad ideas that NLP is built on, and the absence of a formal body to regulate its use, mean that the methods and quality of practice can vary considerably.

We also think that having a really good proxy for patient performance status will allow us to move beyond the limitations of ECOG. And as we have all seen in the papers, there has been significant progress in machine learning in the imaging space, and we would love to take these innovations and incorporate them into our products. To find the patients with this positive biomarker status, we would need to go into the patient chart and look for it, because there is not necessarily a structured field in our EHR that captures this information.

Pseudoscience often has roots in superstitious practices of the past, yet its proponents will often downplay this fact in favor of portraying their field as grounded in rigorous scientific inquiry (Beyerstein, 1995; Lilienfeld et al., 2014). A 2010 review paper sought to evaluate the research findings relating to the principles behind NLP. Of the 33 studies included, only 18 percent were found to support NLP's underlying principles.

So to summarize, since we released this model we have been able to save 5,000 hours of abstraction from this use case alone. Because we used it, we were able to turn this project around in a month, which was really exciting for us.
And because of this, we think it will be a vital tool for us as we keep all of our core registries up to date as the standard of care changes.

How Precise Is NLP?

The NLP system can extract specific meaningful concepts with 98% precision.
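Precision measures what fraction of the concepts a system extracts are correct; on its own it says nothing about how many relevant concepts the system misses, which is what recall measures. A minimal sketch of both calculations follows; the counts are hypothetical and chosen only to show what a 98% precision figure means.

```python
def precision(true_positives, false_positives):
    """Precision = TP / (TP + FP): the share of extracted concepts that are correct."""
    extracted = true_positives + false_positives
    if extracted == 0:
        raise ValueError("precision is undefined when nothing was extracted")
    return true_positives / extracted


def recall(true_positives, false_negatives):
    """Recall = TP / (TP + FN): the share of relevant concepts that were found."""
    relevant = true_positives + false_negatives
    if relevant == 0:
        raise ValueError("recall is undefined when there is nothing to find")
    return true_positives / relevant


# Hypothetical counts: 98 correct extractions, 2 incorrect, 30 relevant concepts missed.
print(precision(true_positives=98, false_positives=2))  # 0.98
print(recall(true_positives=98, false_negatives=30))    # 0.765625
```

With these illustrative counts, the system is right 98% of the time when it extracts something, yet still misses almost a quarter of the relevant concepts, so a precision figure is best read alongside recall.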